Do you yield?

I’m gonna sound like a broken record, but I love watching the innovation cycle that happens. As new metrics and tooling emerge, new opportunities are discovered and new standards and techniques start to become commonplace.

I particularly love it when we can see those changes happening at scale through improvements to the platform, or improvements to tools that are used across the web.

We’re seeing that with Interaction to Next Paint right now.

Google Publisher Tag now provides an adYield config setting that people can use to tell it to yield to the main thread while getting your ads all set up. They’re using scheduler.postTask() to accomplish this, which unfortunately means Firefox and Safari won’t get the benefit yet, as neither has shipped it (though Firefox does have it available behind a flag). At least Chromium-based browsers will benefit.

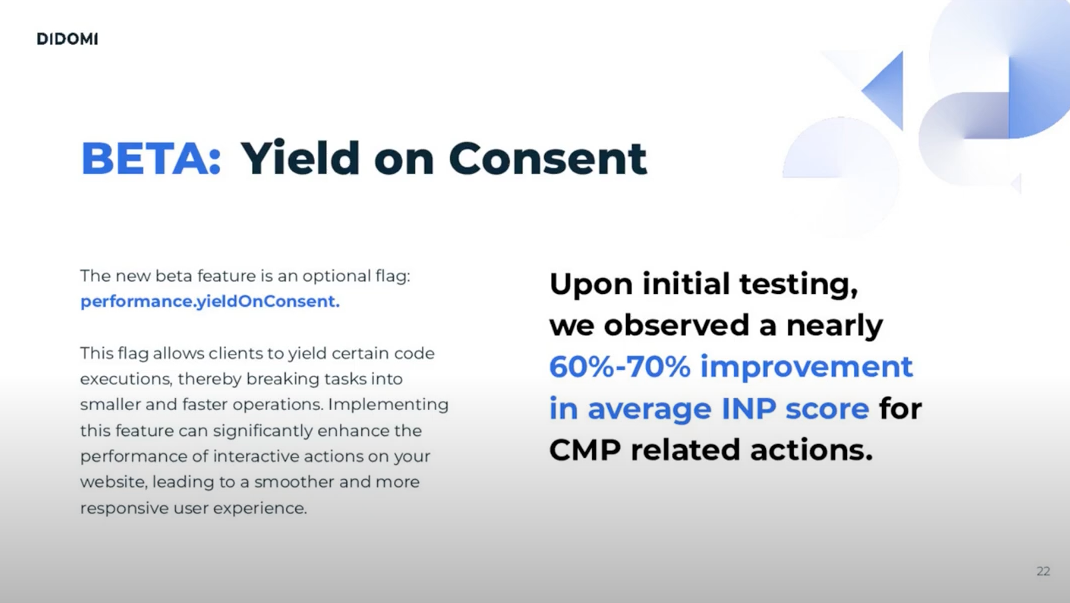

Didomi, a consent management tool, also recently talked about how they used INP data to help them improve the performance of their tool. They, too, introduced a flag (performance.yieldOnConsent) that lets users opt into yielding to the main thread during code execution.

This approach, breaking your code into chunks of execution, then periodically yielding to the main thread, is one that can quickly cure a lot of INP ills. And it doesn’t have to be fancy.

For a recent client of mine, we created a simple helper method that checks for scheduler.yield() support and, if that’s not around, uses a 0-second setTimeout instead. We rolled it out for a lot of their analytics calls that had previously been blocking, and saw anywhere from a 10% to 60% reduction in INP right away.
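To make that concrete, here’s a minimal sketch of what a helper like that could look like. This isn’t the client’s actual code: the names yieldToMain and runInChunks are made up for illustration, and the `any` cast is only there because scheduler isn’t in every TypeScript lib definition.

```typescript
// Hypothetical helper (name is an assumption): yield control back to the
// main thread so pending input and rendering work can run.
function yieldToMain(): Promise<void> {
  const sched = (globalThis as any).scheduler;
  // Prefer scheduler.yield() where the browser supports it.
  if (sched && typeof sched.yield === "function") {
    return sched.yield();
  }
  // Otherwise fall back to a 0-second setTimeout.
  return new Promise<void>((resolve) => setTimeout(resolve, 0));
}

// Hypothetical usage: run a list of small tasks, yielding after each one
// instead of running them all in a single long task.
async function runInChunks(tasks: Array<() => void>): Promise<void> {
  for (const task of tasks) {
    task();
    await yieldToMain();
  }
}
```

You’d then wrap the previously blocking calls as individual tasks and hand them to something like runInChunks, so each one gets its own turn instead of piling into one long task.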

Super simple concept, super impactful results.

Closures and memory

The web is littered with memory issues—we just don’t have the metrics and tooling quite yet to make it obvious at scale. So anytime anyone starts sharing information about memory related performance issues, I’m all ears.

Jake Archibald wrote a great overview of a surprising memory leak that occurs when a reference lies within a function that is no longer callable, but the original scope still is.

That sentence is probably as clear as mud, but that’s where his article comes in handy. Jake does a great job of explaining how and why this occurs so you can keep an eye out for it in your own codebase.
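Here’s a rough sketch of the shape of the problem, as I understand it from the article. The function names, buffer sizes, and delay are all my own assumptions for illustration, and whether the memory is actually retained depends on how the JavaScript engine handles shared closure scopes.

```typescript
// Leaky shape: both closures are created in the same scope, so engines
// typically keep one shared scope object alive for both of them.
function startDemo(bytes: number): () => void {
  const big = new ArrayBuffer(bytes);
  const id = setTimeout(() => {
    // This callback is the only code that reads `big`. Once it has run,
    // it can never be called again.
    console.log(big.byteLength);
  }, 10);
  // The returned function never touches `big`, but as long as someone
  // holds onto it, the shared scope (and `big` with it) may stay in memory.
  return () => clearTimeout(id);
}

// One possible mitigation (a sketch, not the only option): drop the
// reference explicitly once it is no longer needed.
function startDemoReleasing(bytes: number): () => void {
  let big: ArrayBuffer | null = new ArrayBuffer(bytes);
  const id = setTimeout(() => {
    console.log(big?.byteLength);
    big = null; // release the buffer after the callback has used it
  }, 10);
  return () => {
    clearTimeout(id);
    big = null; // also release it if the timer is cancelled first
  };
}
```

If a long-lived object keeps the function returned by startDemo around, the buffer can stick around too, even though nothing can ever read it again.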

CSS Performance Wins By Not Bundling

For years, combining all your CSS into a single stylesheet was something that we all advised doing for performance. But then HTTP/2 came along with its multiplexing and prioritization and changed everything.

While a lot of folks have already made the shift to a more modular approach with several smaller stylesheets, there are still quite a few sites out there using the older approach.

Gov.uk (who have a history of doing a lot of great performance work and sharing the information) have published a great article about how they recently shifted from that single file approach to using several more modular CSS files.

They’ve seen some nice wins as a result (as measured using SpeedCurve on an emulated Mobile 3G device):

- Start Render – 4% improvement from 2.3s to 2.2s

- Largest Contentful Paint – 7% improvement from 2.53s to 2.35s

- Last Painted Hero – 7% change from 3s to 2.8s

I particularly like that they touched on the caching benefits of smaller files, as I feel like that is one upside that tends to get overlooked. When you make a change to your CSS, the browser shouldn’t have to go out and get all the CSS just because one line changed. By having several smaller files, you let the browser keep the majority of the CSS in cache, and only re-download the file that has the change in it. That alone can be a hefty boost.