💤 Another win for boring technology

This past weekend folks kind of lost it over how fast the McMaster-Carr site is. There was a tweet asking how a company 100+ years old had the fastest site on the internet.

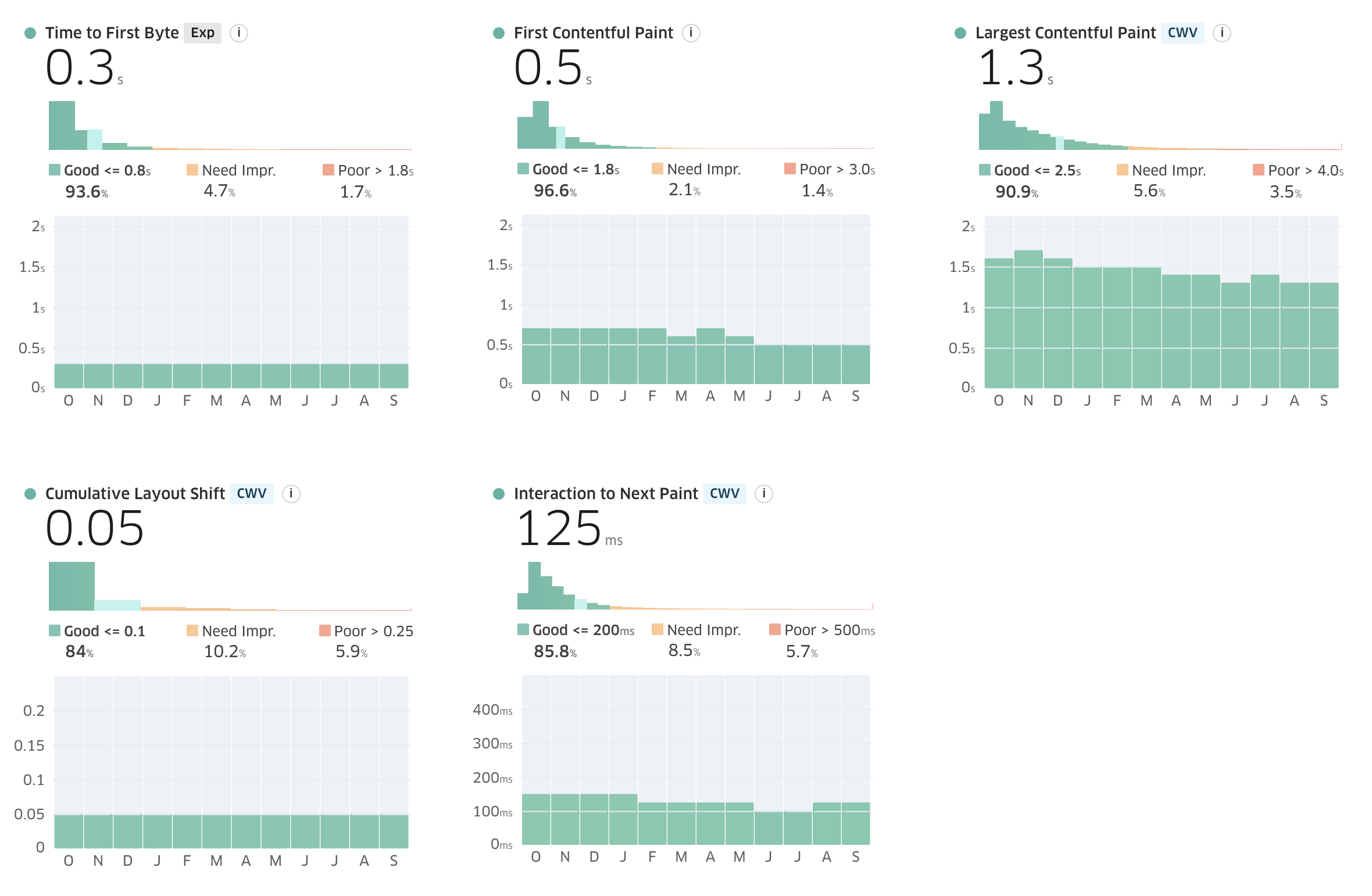

And it wasn’t really hyperbole. The site is incredibly fast. I mean, just look at all that green in their CrUX (Chrome User Experience Report) data.

Wes Bos put together a nice video walking through exactly what they’re doing to make the site so fast. There’s a lot going on here with preloads, prefetching links on hover, a service worker, etc.
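To make the "prefetch links on hover" idea a little more concrete, here is a minimal TypeScript sketch. It is not McMaster-Carr's actual code. It assumes a browser that supports `<link rel="prefetch">`, and the names `createHoverPrefetcher` and `appendPrefetchLink` are hypothetical. The filtering and de-duplication logic is kept separate from the DOM wiring so it can be exercised on its own.

```typescript
// Sketch of hover-triggered prefetching (hypothetical names, not McMaster-Carr's code).
// Assumes the browser honors <link rel="prefetch"> as a low-priority fetch hint.

type PrefetchFn = (href: string) => void;

// Returns a hover handler that requests each same-origin URL at most once.
function createHoverPrefetcher(origin: string, prefetch: PrefetchFn) {
  const seen = new Set<string>();
  return (rawHref: string): boolean => {
    let url: URL;
    try {
      url = new URL(rawHref, origin); // resolve relative links against the page origin
    } catch {
      return false; // ignore malformed hrefs
    }
    if (url.origin !== origin) return false; // only prefetch our own pages
    url.hash = ""; // "/a#x" and "/a#y" point at the same document
    const key = url.href;
    if (seen.has(key)) return false; // already requested once
    seen.add(key);
    prefetch(key);
    return true;
  };
}

// Browser wiring: inject a <link rel="prefetch"> so the browser may fetch the
// page ahead of time and reuse it from cache if the user clicks.
function appendPrefetchLink(href: string): void {
  const link = document.createElement("link");
  link.rel = "prefetch";
  link.href = href;
  document.head.appendChild(link);
}

// Only attach listeners when running in a browser.
if (typeof document !== "undefined") {
  const onHover = createHoverPrefetcher(location.origin, appendPrefetchLink);
  document.addEventListener("mouseover", (event) => {
    const anchor = (event.target as Element | null)?.closest?.("a[href]");
    if (anchor instanceof HTMLAnchorElement) onHover(anchor.href);
  });
}
```

The bet here is that the gap between hovering over a link and clicking it is often enough time for the browser to fetch the next page, so the click feels close to instant. Limiting it to same-origin URLs and fetching each one only once keeps the extra requests from getting out of hand.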

The tech stack isn’t anything close to “modern”. They’re ASP.NET pages, using YUI (!!) and jQuery for their JavaScript. It’s retro 1999.

We talk a lot about tech debt with old codebases. They grow and sprawl and become unwieldy as time goes on. And while that’s often the case, the flip side is that if you’re given the time and space to actively work on them, you can fine-tune them and give them the spit and polish they need to run very, very efficiently.

That’s the other part of this conversation. It’s not just that they’ve opted to stick with their tried-and-true tech stack for years while many other companies jumped on the latest and greatest and had to redo their architecture every few years.

It’s that the site itself is hyper-focused on providing what its users need, and nothing more. It isn’t bogged down with ads or bells and whistles that users don’t need. It’s an online parts catalog built for efficiency: it’s fast and easy to get to the parts you need, no matter how old the device you’re using is. The site’s performance comes as much from its decisions about the user experience as from its technological decisions.

I made the case a while back, in a talk in London, that perhaps we should redefine performance as “how efficiently users can accomplish their goals”. This is the kind of result you get when a company takes that to heart.

🔙 From React to Web Components

The Microsoft Edge team has been working for a while on improving the performance of their UI by migrating away from React and relying instead on a more modern web components approach.

The early results were great, with Edge reporting a 42% faster UI for its users. Even better, they reported that it was 76% faster on lower-end devices (devices without an SSD or with less than 8GB of RAM). That’s not too surprising: heavy reliance on JavaScript is a premium tax that only high-end devices can actually afford.

According to a new article on The New Stack, the goal is to migrate 50% of the existing React-based UIs in Edge to web components by the end of the year. As Andrew Ritz (lead of the Edge Fundamentals team) put it:

“We realized that our performance, especially on low-end machines, was really terrible — and that was because we had adopted this React framework, and we had used React in probably one of the worst ways possible.”

That’s not exactly surprising. React has been a performance bottleneck since, well, since day one. The team itself admitted as much in an early discussion about the framework:

People started playing around with this internally, and everyone had the same reaction. They were like, “Okay, A.) I have no idea how this is going to be performant enough, but B) it’s so fun to work with” Right? Everybody was like, “This is so cool, I don’t really care if it’s too slow—somebody will make it faster.”

People have worked to make it faster, but it’s hard to dig yourself out of a hole that deep.

Despite recent controversy, I’m pretty convinced at the moment that web components are a core part of the next wave of web technology. I’ve used them on several projects at this point, and the results have been great: building on the web’s foundation instead of fighting against it has consistently produced excellent performance. There’s certainly work to be done to make the development process more ergonomic, but building with web components feels a bit like what I want the future to feel like.

🛠️ Ditching JavaScript in Build Tools?

I really enjoyed this post from Nolan Lawson discussing the trend of rewriting JavaScript tools in other, “faster” languages.

The point that a rewrite is often faster simply because it’s a rewrite is a very valid one. Over time we add more features and functionality to our code, and that starts to carry a cost not just in performance but in maintainability as well. A rewrite lets us start with those learnings already in mind.

But my favorite point is around the accessibility of JavaScript tools built in JavaScript:

“For years, we’ve had both library authors and library consumers in the JavaScript ecosystem largely using JavaScript. I think we take for granted what this enables.”

I wrote about this a few years back, but having JavaScript available on the front end, the back end, the edge, and in build tools is a powerful way to let developers extend their reach into different parts of the tech stack. Any decision to move away from that needs to be VERY carefully considered.